The first module of my "Digital Effects" course is called Take One Painting. It is a project used to teach us the basics of CG, compositing (via set extension) and working on set (placing tracking markers, VFX photography, set measurements, data wrangling). It also gave us the opportunity to work with the cinematography and production design courses.

The Brief

This is how the Take One Painting brief works:

--> The heads of department choose a period painting.

--> The production designers are shown the painting and told to design a set based on it.

--> All 8 production designers design the set, and the best design is chosen for the shoot.

--> The production designers then build the set and source the costumes.

--> Once the set is built, the cinematographers set up the camera to match the painter's point of view. They also set up and light the green screen.

--> When everything is ready, we come in to take all the necessary measurements, place the tracking markers, record the camera data and take the VFX photography.

--> Once the shoot is complete, we spend the next 6 months learning Maya, Nuke, PFTrack and any other relevant software that will help us achieve a photorealistic set extension.

In this post I want to take you through the same journey I went through while learning about the entire post-production pipeline. I am not going to go into much detail about what I learned; instead, I will treat this as a progress report and go over any major problems I faced.

The chosen painting this year was Hospital at Granada by John Singer Sargent, shown below:

Directly below are 2 stills from the tracking shot that was filmed:

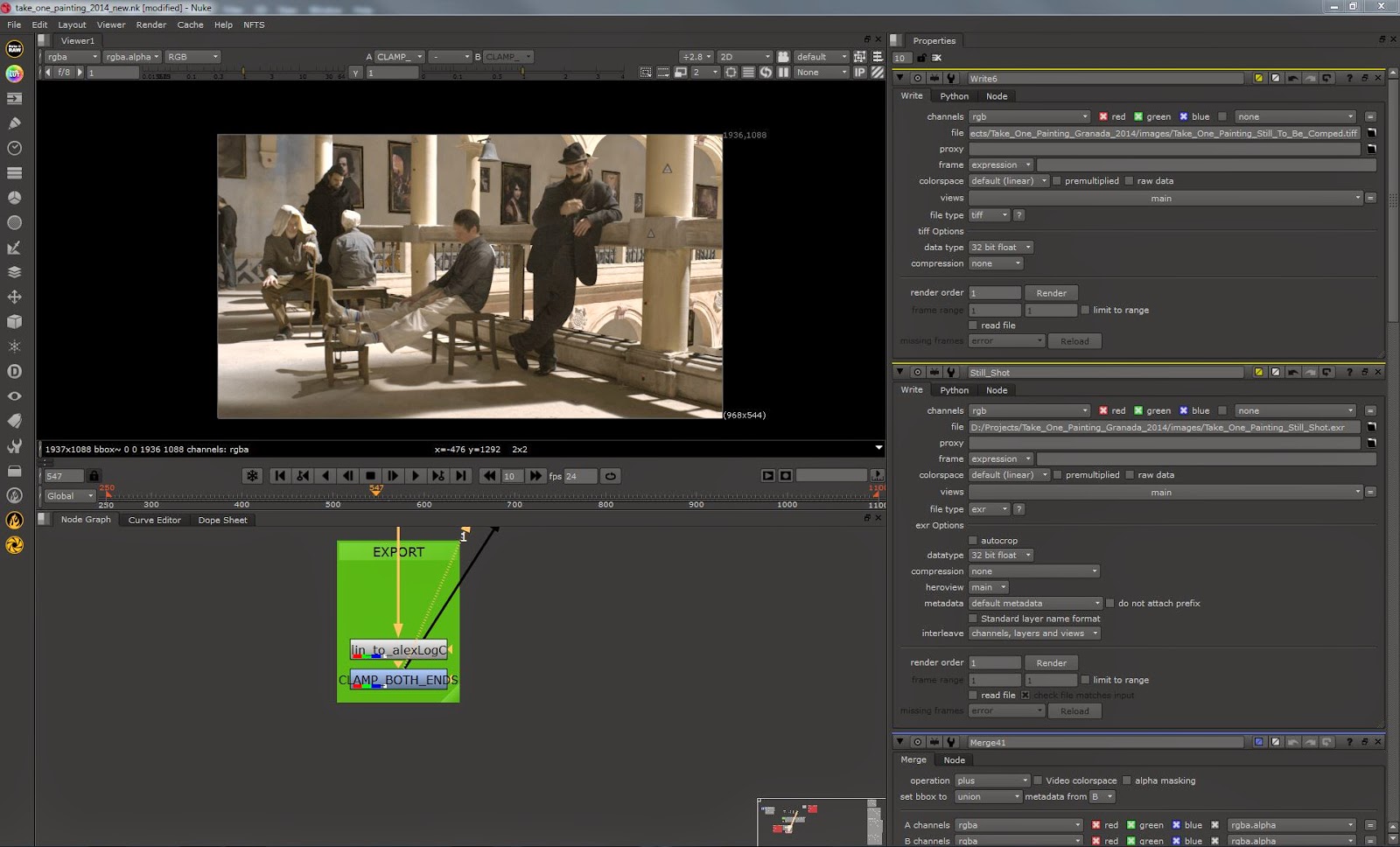

We were also told to work on a locked-off shot of the set, which was supposed to closely match the painting (with some added action from the actors). Below is the still plate that I used, as well as the end result:

Tracking

All my tracking was done in PFTrack. I made a few tests in MatchMover beforehand, but went with PFTrack, which is used professionally, for my final track.

The main problem I had with this footage was getting a good camera solve. I knew that the lens was set to 25mm, but I did not know what the EXACT focal length was. We did shoot a checkerboard grid with the same lens; however, the grid chart itself was old and warped, so using it would actually have introduced more errors.

I had no access to 3DEqualizer for this project, so I could not use its ability to calculate the camera's focal length. Instead (as shown in the image below), I manually entered different focal lengths, from 25.00mm to 26.7mm, and used the one that gave me the best result. The Estimate Focal node was not a very accurate way of estimating the focal length because it is all done by eye (exactly like the Orient Scene node below), which I think is a pretty poor approach.
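To get a feel for why a fraction of a millimetre matters, here is a small Python sketch that converts each candidate focal length into a horizontal field of view. It assumes an ideal pinhole camera and a Super 35 sensor width of 24.89mm; the post does not say which camera or sensor was used, so swap in the real value. Under that assumption the field of view changes by roughly a degree between 25mm and 25.6mm, and by about three degrees across the whole 25mm to 26.7mm range.

```python
import math

# Assumption: Super 35 sensor width. The actual camera/sensor is not stated,
# so replace this with the real value for your footage.
SENSOR_WIDTH_MM = 24.89


def horizontal_fov_deg(focal_mm: float, sensor_width_mm: float = SENSOR_WIDTH_MM) -> float:
    """Horizontal field of view of an ideal pinhole camera, in degrees."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_mm)))


def focal_candidates(start_mm: float, stop_mm: float, step_mm: float) -> list:
    """Evenly spaced focal lengths from start to stop (inclusive) to try in the solver."""
    count = int(round((stop_mm - start_mm) / step_mm))
    return [round(start_mm + i * step_mm, 3) for i in range(count + 1)]


if __name__ == "__main__":
    for f in focal_candidates(25.0, 26.7, 0.1):
        print(f"{f:5.2f} mm -> {horizontal_fov_deg(f):6.3f} deg")
```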

Below are 2 images showing my camera solve with a focal length of 25mm and then 25.6mm. The leftmost group of points is supposed to represent the back wall of the set. In the 25mm solve these points are almost randomly scattered, while the 25.6mm solve gave me a fairly solid back wall (although it is quite hard to see in the image).

25mm

25.6mm

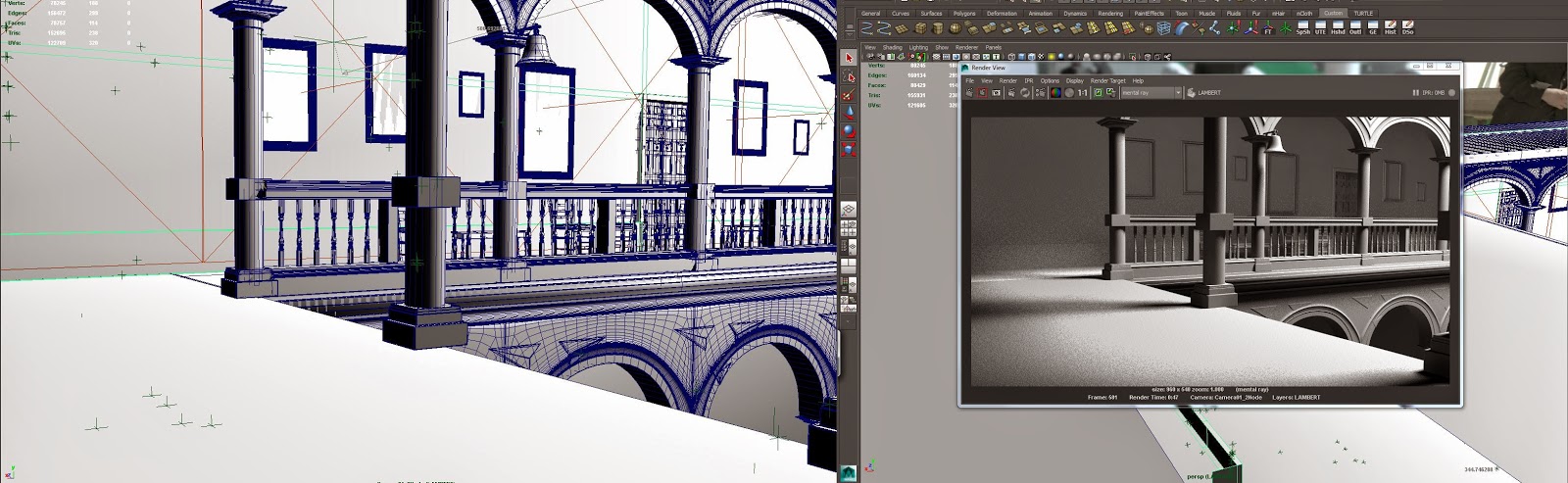
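I judged the solves by eye, but the same check can be put into numbers. The sketch below assumes the back-wall points of each trial solve have been exported as an N×3 array (how you get them out of PFTrack is not covered here) and scores how flat each cloud is with an SVD plane fit. Because every solve has its own arbitrary scale, the score is normalised by the overall spread of the points; `planarity_error` and `best_focal` are hypothetical helper names.

```python
import numpy as np


def planarity_error(points) -> float:
    """Scale-independent measure of how far a point cloud is from a flat plane.

    Returns the smallest singular value of the centred points divided by the
    norm of all three singular values: 0 means perfectly coplanar points.
    """
    pts = np.asarray(points, dtype=float)
    centred = pts - pts.mean(axis=0)
    # Singular values (sorted largest first) measure the spread along three
    # orthogonal axes; the smallest is the spread along the best-fit plane normal.
    s = np.linalg.svd(centred, compute_uv=False)
    return float(s[-1] / np.linalg.norm(s))


def best_focal(solves: dict) -> float:
    """Return the focal length (dict key) whose back-wall points are flattest."""
    return min(solves, key=lambda f: planarity_error(solves[f]))
```

In use, `solves` would map each focal length you tried (25.0, 25.1, ...) to that solve's back-wall points, and `best_focal(solves)` returns the flattest one.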

Modelling and Texturing

I don't have many images for this stage because the CG is simply too far away to need detailed, close-up textures. Also, as you can see, the models are fairly low-res, as there would be too many things in front of them (the actors on set, the banisters connecting the pillars, etc.) for the detail to be seen.
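A quick pinhole-camera calculation backs up the "too far away" argument: an object's size on the sensor is roughly focal length × object size ÷ distance, which can then be converted from millimetres into pixels. The prop size, distance, 24.89mm sensor width and 1920-pixel plate width used below are illustrative assumptions, not measurements from the set.

```python
import math


def projected_size_px(focal_mm, object_size_m, distance_m, image_width_px, sensor_width_mm):
    """Approximate on-screen size, in pixels, of an object facing the camera.

    Assumes square pixels and an object near the centre of frame.
    """
    # Pinhole projection: size on the sensor (mm) = focal length * size / distance.
    size_on_sensor_mm = focal_mm * object_size_m / distance_m
    # Convert millimetres on the sensor into pixels in the plate.
    return size_on_sensor_mm * image_width_px / sensor_width_mm


def texture_size_for(pixels_on_screen):
    """Smallest power-of-two texture edge that covers the on-screen size."""
    return 1 << max(0, math.ceil(math.log2(pixels_on_screen)))
```

For example, a hypothetical 1m prop 20m from a 25.6mm lens comes out at roughly 99 pixels across, so a 128-pixel texture would already cover it.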

A simple wheelchair I modelled to add to the background. It is low poly with simple textures, as it will only be seen briefly and will be placed behind various other objects and actors.

Another simple prop I made. Once again, it is low poly and the textures are not very detailed. In the image of the chair 'cushion' you can see that the texture has various problems, such as stretching and not being properly aligned with the geometry. However, none of this matters, as the texture will look fine from far away (and the chair is, indeed, very far from the camera).
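Stretching like this can also be spotted numerically by comparing how much of the UV space each face takes up against how much of the surface area it covers. The sketch below is a simple area-only check with hypothetical helper names (it will not catch shearing where the area happens to be preserved). Ratios near 1 mean a face gets its fair share of texels; values well below 1 mark faces where the texture is spread thin (stretched), and values well above 1 mark faces where it is squashed.

```python
import numpy as np


def area_3d(p0, p1, p2):
    """Area of a triangle in 3D space."""
    e1 = np.subtract(p1, p0)
    e2 = np.subtract(p2, p0)
    return 0.5 * float(np.linalg.norm(np.cross(e1, e2)))


def area_uv(t0, t1, t2):
    """Area of a triangle in UV space (2D cross product)."""
    (u0, v0), (u1, v1), (u2, v2) = t0, t1, t2
    return 0.5 * abs((u1 - u0) * (v2 - v0) - (u2 - u0) * (v1 - v0))


def stretch_ratios(tris_3d, tris_uv):
    """Per-face share of UV area divided by share of surface area."""
    a3 = np.array([area_3d(*t) for t in tris_3d])
    auv = np.array([area_uv(*t) for t in tris_uv])
    return (auv / auv.sum()) / (a3 / a3.sum())
```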

Below is a low poly detail that would be added between each of the columns. The two images directly below show that my texturing was done using a combination of Photoshop and Mudbox, with CrazyBump for normal maps.
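CrazyBump's own algorithm is not described here, but the basic idea of turning a height (bump) map into a tangent-space normal map is simple: take the slope of the height in x and y and build a normal from it. This NumPy sketch shows that idea with central differences. `strength` is a hypothetical scale parameter, and the sign of the green channel depends on whether your renderer expects OpenGL- or DirectX-style normal maps, so flip it if bumps look inverted.

```python
import numpy as np


def height_to_normal(height, strength: float = 1.0):
    """Convert a 2D height map into an RGB tangent-space normal map in [0, 1]."""
    h = np.asarray(height, dtype=float)
    # np.gradient returns slopes along rows (y) first, then columns (x).
    dy, dx = np.gradient(h)
    nx = -dx * strength
    ny = -dy * strength  # sign convention is an assumption (OpenGL vs DirectX)
    nz = np.ones_like(h)
    n = np.stack([nx, ny, nz], axis=-1)
    # Normalise each pixel's vector to unit length.
    n /= np.linalg.norm(n, axis=-1, keepdims=True)
    # Remap from [-1, 1] to [0, 1] so it can be stored in an image.
    return n * 0.5 + 0.5
```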

Nothing in my scene was subdivided at render time. The arches are the only objects with any real subdivisions, and that is because of the way I wanted to model and texture each arch. In the image below, you are seeing extra edge loops that I added to allow more deformation. The scene might be geometric and straight, but it is man-made, so nothing in it should ever be perfectly straight. The extra edge loops let me add this extra level of deformation, which I did with the sculpt tool and, in some cases, the selection tool with soft selection turned on.
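Soft selection moves the selected vertex at full strength and its neighbours by a weight that falls off with distance, which is why the extra edge loops matter: without vertices to move, there is nothing to bend. The sketch below mimics that on a row of vertices using a smoothstep falloff. Maya lets you edit its falloff curve, so smoothstep is only an assumed stand-in, and `soft_displace` is a hypothetical name, not a Maya command.

```python
import numpy as np


def soft_displace(vertices, centre, radius, offset):
    """Move vertices by `offset`, weighted by a smooth falloff around `centre`."""
    v = np.asarray(vertices, dtype=float)
    d = np.linalg.norm(v - np.asarray(centre, dtype=float), axis=1)
    # t is 1 at the centre and 0 at (or beyond) the falloff radius.
    t = np.clip(1.0 - d / radius, 0.0, 1.0)
    # Smoothstep easing so the deformation blends in without a visible crease.
    w = t * t * (3.0 - 2.0 * t)
    return v + w[:, None] * np.asarray(offset, dtype=float)
```

For example, pushing the middle vertex of an 11-vertex edge loop up by 0.05 units with a radius of 3 leaves the vertices 3 or more units away untouched and gives a gentle sag in between.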

As some of you may have noticed from the images above, I used a combination of Maya and 3ds Max to model my scene. This led to a series of problems with imports, exports and file types. I will not go into too much detail, but basically I needed to export my assets from Max to Maya as instances. An OBJ file would not give me instanced versions of an object, but it would maintain the correct scale; it would also sometimes place the exported item in the wrong position in my scene. An FBX file, on the other hand, would maintain instances, but in some cases it would also offset my models. Another problem was that my models would be triangulated even though I had disabled triangulation. I found that, for some reason, I had to add an Edit Poly modifier to the top of every object's modifier stack in Max, and this would prevent triangulation on import into Maya. OBJ files did not have this problem, but they had the other drawbacks I mentioned.
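When juggling exports like this, a small script can confirm two of the symptoms above without opening either package: whether faces arrived as quads or triangles, and where each object's bounding box sits (an unexpected centre points to an offset). This is a minimal sketch that only reads plain OBJ text (`o`/`g`, `v` and `f` lines); it does not handle FBX, which would need Autodesk's FBX SDK, and `obj_report` is a hypothetical helper.

```python
from collections import defaultdict


def obj_report(obj_text: str) -> dict:
    """Per-object face counts (tris, quads, n-gons) and bounding-box centre."""
    vertices = []
    faces = defaultdict(list)  # object/group name -> list of faces (vertex indices)
    current = "default"
    for line in obj_text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] in ("o", "g"):
            current = " ".join(parts[1:]) or "default"
        elif parts[0] == "v":
            vertices.append(tuple(float(c) for c in parts[1:4]))
        elif parts[0] == "f":
            idx = []
            for token in parts[1:]:
                i = int(token.split("/")[0])  # ignore texture/normal indices
                # OBJ indices are 1-based; negative ones count back from the latest vertex.
                idx.append(i - 1 if i > 0 else len(vertices) + i)
            faces[current].append(idx)

    report = {}
    for name, face_list in faces.items():
        counts = {"tris": 0, "quads": 0, "ngons": 0}
        used = set()
        for f in face_list:
            key = "tris" if len(f) == 3 else "quads" if len(f) == 4 else "ngons"
            counts[key] += 1
            used.update(f)
        xs, ys, zs = zip(*(vertices[i] for i in used))
        centre = tuple((min(c) + max(c)) / 2 for c in (xs, ys, zs))
        report[name] = {**counts, "bbox_centre": centre}
    return report
```

Comparing each `bbox_centre` with the object's position in the source scene is a quick way to catch the offsets described above.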

Lighting

Below are screen captures of different lighting test results. After trying to match the shadow perspective and quality/hardness, I went back and forth between Maya and Nuke to compare the luminance values of my CG against the live action.

Trying to match the luminance difference between the shadowed areas and the areas fully exposed to the sun. I sampled the actual footage in Nuke and then sampled the same light and shadow areas in the render.
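The sampling comparison can be expressed as a difference in stops between the sun and shadow samples, for the plate and for the render. The sketch assumes the sampled values are linear RGB (as in Nuke's linear working space) with Rec. 709 primaries, so luminance uses the Rec. 709 weights; the helper names are hypothetical and the sample values would come from your own plate and render.

```python
import math


def rec709_luminance(rgb):
    """Relative luminance of a linear RGB sample using Rec. 709 weights."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def sun_shadow_stops(sun_rgb, shadow_rgb):
    """How many stops brighter the sunlit sample is than the shadow sample."""
    return math.log2(rec709_luminance(sun_rgb) / rec709_luminance(shadow_rgb))


def contrast_mismatch(plate_sun, plate_shadow, cg_sun, cg_shadow):
    """Plate contrast minus CG contrast, in stops (positive: CG is too flat)."""
    return sun_shadow_stops(plate_sun, plate_shadow) - sun_shadow_stops(cg_sun, cg_shadow)
```

For example, a plate where the sun reads 8 times brighter than the shadow (3 stops) against a render where it reads only 4 times brighter (2 stops) gives a mismatch of 1 stop, meaning the CG shadows need to come down or the sun needs to go up.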

Rendering

V-Ray was used for rendering. I had never used V-Ray before and thought this would be a great opportunity to learn it. Below are all the render passes I rendered out. I rendered multiple shadow passes because I had problems early on with the default shadow pass. The Raw passes were also rendered out as a backup, in case I ran into problems with their non-raw counterparts. One pass I should have rendered is Light Select; however, I think my multimatte passes, combined with my lighting and GI passes, might give me enough flexibility to do without it.
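The maths behind rebuilding a beauty from passes is mostly addition, which is roughly what a chain of Merge (plus) nodes does in Nuke. This NumPy sketch assumes the passes are already loaded as linear float arrays of the same size and assumes the additive "back to beauty" relationship from V-Ray's render element documentation; the dictionary keys are hypothetical stand-ins for elements such as VRayLighting and VRayGlobalIllumination, and a real scene may need more elements (SSS, caustics and so on). It also shows a raw pass being turned back into its filtered version, and a multimatte channel used as a mask to grade only the lighting.

```python
import numpy as np

# Hypothetical keys standing in for additive V-Ray render elements.
ADDITIVE_PASSES = ("lighting", "gi", "reflection", "refraction", "specular", "self_illumination")


def rebuild_beauty(passes):
    """Sum whichever additive passes are present back into a beauty image."""
    first = next(iter(passes.values()))
    out = np.zeros(np.shape(first), dtype=float)
    for name in ADDITIVE_PASSES:
        if name in passes:
            out += passes[name]
    return out


def lighting_from_raw(raw_lighting, diffuse_filter):
    """Recreate the lighting pass from its raw counterpart (raw x diffuse filter)."""
    return np.asarray(raw_lighting) * np.asarray(diffuse_filter)


def graded_beauty(passes, matte, lighting_gain):
    """Rebuild the beauty with the lighting pass scaled inside a 0-1 matte."""
    graded = dict(passes)
    # The matte is a single channel (H, W); add an axis so it broadcasts over RGB.
    gain = 1.0 + (lighting_gain - 1.0) * np.asarray(matte, dtype=float)[..., None]
    graded["lighting"] = passes["lighting"] * gain
    return rebuild_beauty(graded)


def max_difference(beauty, rebuilt):
    """Largest per-channel difference between the rendered and rebuilt beauty."""
    return float(np.max(np.abs(np.asarray(beauty) - np.asarray(rebuilt))))
```

A `max_difference` close to zero is a quick sanity check that no pass is missing before any grading starts.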

Comparing my beauty pass with my comped render passes.

The CG roughly comped in with the live action.
